Social human-human interactions rely on various communication methods, including verbal communication and physical behavior. The CIMLab has developed a facial-affect estimation system for use in human-robot interactions, allowing robots to better understand and interpret human affect.

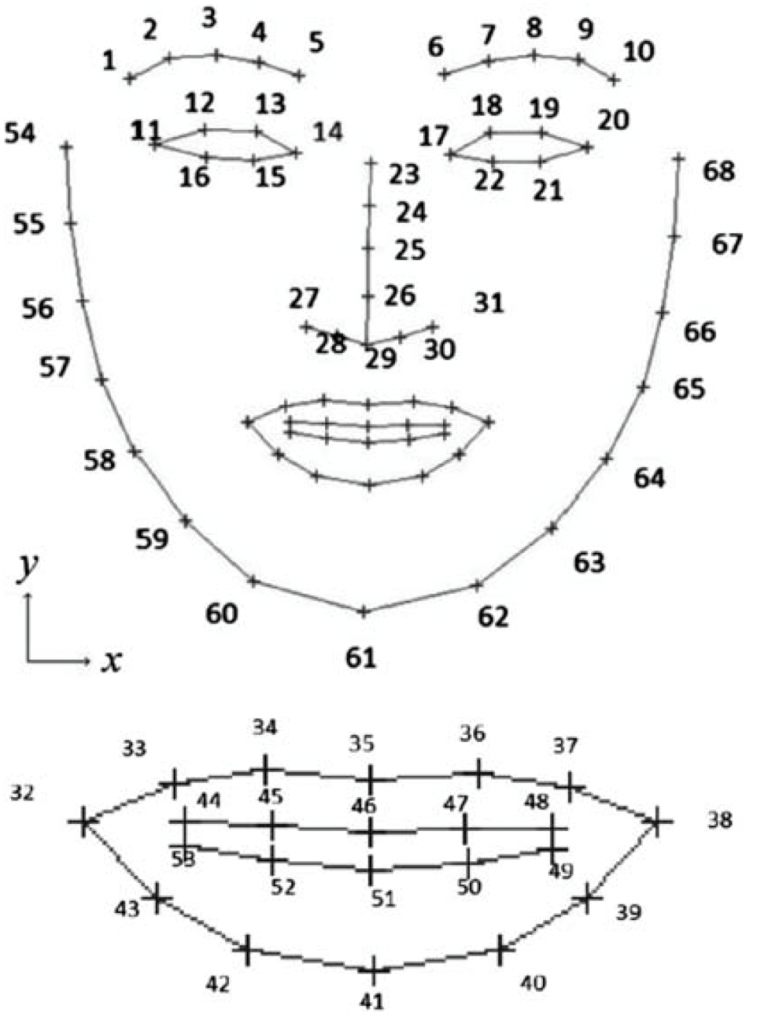

The proposed methodology comprises two main modules: (i) facial feature point detection, and (ii) affect recognition. Facial feature point detection marks a set of points representing landmarks on a person’s face in an image. The feature points are then fitted to a face shape model in order to account for variations in the alignment and scale of a person’s head (Figure 1).
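The text above does not say how the feature points are fitted to the face shape model. As a minimal sketch, assuming a similarity (Procrustes) alignment is an acceptable stand-in, the following removes translation, rotation, and scale differences between detected points and a reference shape. The function name `align_to_shape_model` and the sample coordinates are hypothetical and chosen only for illustration.

```python
import numpy as np


def align_to_shape_model(points, model):
    """Similarity-align detected points (N, 2) to a reference shape (N, 2).

    Assumption: a Procrustes-style fit; the source does not name its method.
    """
    points = np.asarray(points, dtype=float)
    model = np.asarray(model, dtype=float)

    # Remove translation by centering both point sets on their centroids.
    model_mean = model.mean(axis=0)
    p = points - points.mean(axis=0)
    m = model - model_mean

    # Best rotation from the SVD of the 2x2 cross-covariance matrix.
    u, s, vt = np.linalg.svd(p.T @ m)
    d = 1.0 if np.linalg.det(u @ vt) >= 0 else -1.0  # forbid reflections
    rotation = u @ np.diag([1.0, d]) @ vt

    # Least-squares scale that maps the centered points onto the model.
    scale = (s[0] + d * s[1]) / (p ** 2).sum()

    return scale * p @ rotation + model_mean


# Hypothetical reference shape and a rotated, scaled, shifted copy of it.
model = np.array([[0.0, 0.0], [4.0, 0.0], [1.0, 3.0], [3.0, 3.0], [2.0, 1.5]])
theta = np.deg2rad(30.0)
rot = np.array([[np.cos(theta), -np.sin(theta)],
                [np.sin(theta), np.cos(theta)]])
detected = 2.5 * model @ rot + np.array([10.0, -7.0])

aligned = align_to_shape_model(detected, model)
```

With noise-free input such as `detected`, the aligned points coincide with the reference shape; with real detections the result is the least-squares best fit.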

Figure 1. Locations of Feature Points in the Face Shape Model


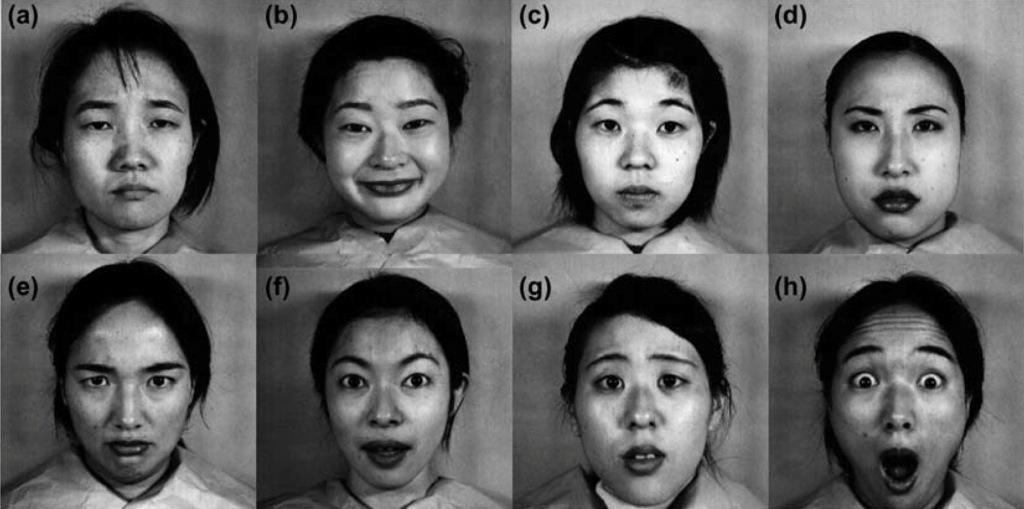

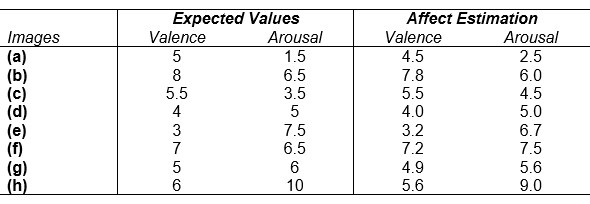

The locations of the feature points are then provided to the affect estimation system, which determines the corresponding valence and arousal values for a particular facial expression. The novelty of our proposed affect estimation system is the inclusion of non-dimensionalized facial expression parameters (FEPs), which allow affect to be estimated from any facial expression without requiring prior expressions as a baseline. Experiments demonstrated the system’s ability to estimate facial affect from a variety of individuals exhibiting both posed and natural facial expressions (Figure 2).
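The specific FEPs are not listed here. To illustrate what non-dimensionalizing means in this setting, the sketch below divides distances between landmarks by a reference distance on the same face, so the resulting values do not depend on image scale or on a previously recorded baseline expression. The landmark indices, the choice of the distance between the eyes as the reference, and the two parameter names are all assumptions made for the example, not the system's actual definitions.

```python
import numpy as np

# Hypothetical landmark indices, for illustration only.
LEFT_EYE, RIGHT_EYE, MOUTH_LEFT, MOUTH_RIGHT, UPPER_LIP, LOWER_LIP = range(6)


def facial_expression_parameters(points):
    """Return scale-free ratios computed from (N, 2) landmark coordinates."""
    points = np.asarray(points, dtype=float)

    def dist(i, j):
        return float(np.linalg.norm(points[i] - points[j]))

    # Assumed reference length: distance between the two eye landmarks.
    reference = dist(LEFT_EYE, RIGHT_EYE)
    if reference == 0.0:
        raise ValueError("eye landmarks coincide; cannot normalize")

    return {
        "mouth_width": dist(MOUTH_LEFT, MOUTH_RIGHT) / reference,
        "mouth_opening": dist(UPPER_LIP, LOWER_LIP) / reference,
    }


# Made-up coordinates: the same face at two different image scales.
face = np.array([[0.0, 0.0], [4.0, 0.0], [1.0, 3.0],
                 [3.0, 3.0], [2.0, 2.5], [2.0, 3.5]])
feps_small = facial_expression_parameters(face)
feps_large = facial_expression_parameters(3.0 * face)
```

Because every parameter is a ratio of two lengths measured on the same face, `feps_small` and `feps_large` are equal even though the second face is three times larger in the image.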

Figure 2. Examples of Facial Expression Images Used in Experiments


Table 1. Affect Values for Images in Figure 2
